Introduction

This is one of the articles in the Node.js Advent Calendar 2016.

Purpose

Sometimes I need to write batch jobs in Node.js, but online articles mostly cover web applications, so it's hard to find batch samples.

This article shows one way I write batch jobs with Node.js.

The sample code for this article is available here.

Environment

- node.js : v6.9.1

- npm : 4.0.5

- Jenkins : 2.26

- And others

gulp.js

I run gulp's watch task while I write a batch with Node.js.

I write the gulpfile in CoffeeScript and register ‘lint’, ‘unit test’ and ‘code coverage’ tasks.

```coffee
gulp = require 'gulp'
eslint = require 'gulp-eslint'
plumber = require 'gulp-plumber'
mocha = require 'gulp-mocha'
gutil = require 'gulp-util'
istanbul = require 'gulp-istanbul'

files =
  src: './src/*.js'
  spec: './test/*.js'

# Lint the sources with ESLint and fail on any error
gulp.task 'lint', ->
  gulp.src files.src
    .pipe plumber()
    .pipe eslint()
    .pipe eslint.format()
    .pipe eslint.failAfterError()

# Run the unit tests with mocha
gulp.task 'test', ->
  gulp.src files.spec
    .pipe plumber()
    .pipe mocha({ reporter: 'list' })
    .on('error', gutil.log)

# Instrument the sources so that istanbul can record coverage
gulp.task 'pre-coverage', ->
  gulp.src files.src
    .pipe plumber()
    .pipe(istanbul())
    .pipe(istanbul.hookRequire())

# Run the tests, write xunit and coverage reports, enforce a 60% threshold
gulp.task 'coverage', ['pre-coverage'], ->
  gulp.src files.spec
    .pipe plumber()
    .pipe(mocha({reporter: "xunit-file", timeout: "5000"}))
    .pipe(istanbul.writeReports('coverage'))
    .pipe(istanbul.enforceThresholds({ thresholds: { global: 60 } }))

# Re-run tests (and lint for sources) whenever a file changes
gulp.task 'watch', ->
  gulp.watch files.src, ['test', 'lint']
  gulp.watch files.spec, ['test']
```

The watch task monitors the source code and runs the registered tasks whenever files change. In this gulpfile, it runs ‘test’ and ‘lint’ when a source file changes, and ‘test’ when a spec file changes.

For linting, I use ESLint with the Airbnb style guide. The Airbnb config doesn't cover Node.js (server-side) specifics, so I also add eslint-plugin-node.

```json
{
  "extends": ["airbnb", "plugin:node/recommended", "eslint:recommended"],
  "plugins": ["node"],
  "env": {
    "node": true
  },
  "rules": {
  }
}
```

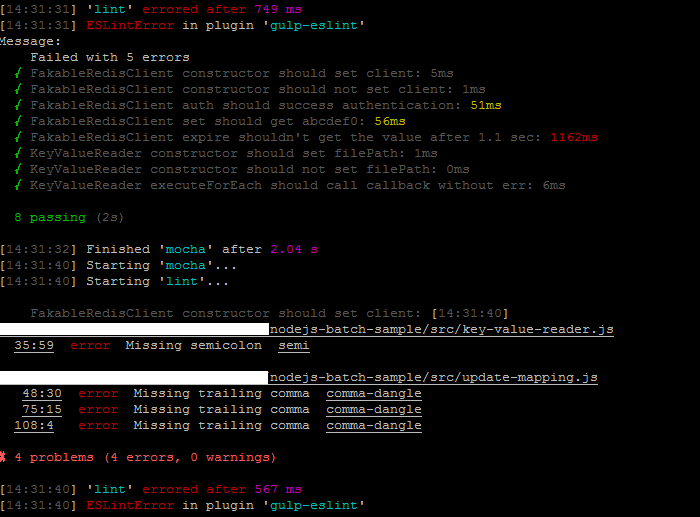

The lint task output looks like the following.

```
$ ./node_modules/.bin/gulp lint
[08:57:09] Requiring external module coffee-script/register
[08:57:10] Using gulpfile ~/nodejs-batch-sample/gulpfile.coffee
[08:57:10] Starting 'lint'...
[08:57:11]
~/nodejs-batch-sample/src/redis-client.js
   69:15  error  Missing trailing comma                 comma-dangle
   84:58  error  Missing semicolon                      semi
   88:21  error  Strings must use singlequote           quotes
   91:23  error  Strings must use singlequote           quotes
   95:34  error  Expected '===' and instead saw '=='    eqeqeq
   98:1   error  Trailing spaces not allowed            no-trailing-spaces
  101:4   error  Missing trailing comma                 comma-dangle

✖ 7 problems (7 errors, 0 warnings)

[08:57:11] 'lint' errored after 768 ms
[08:57:11] ESLintError in plugin 'gulp-eslint'
```

Main part

In this article, I'll show you a sample batch that reads data from a file and writes it to Redis.

Here is the entry point script.

```javascript
// ===== Preparing ===============================

// Libraries
const Async = require('async');
const Log4js = require('log4js');
const Mailer = require('nodemailer');
const CommandLineArgs = require('command-line-args');

// Dependent classes
const FakableRedisClient = require('./fakable-redis-client');
const KeyValueReader = require('./key-value-reader');

// Logger
Log4js.configure('./config/logging.json');
const logger = Log4js.getLogger();

// Command line arguments
const optionDefinitions = [
  { name: 'env', alias: 'e', type: String },
  { name: 'fakeredis', alias: 'f', type: Boolean },
];
const cmdOptions = CommandLineArgs(optionDefinitions);
const targetEnv = cmdOptions.env ? cmdOptions.env : 'staging';
const fakeRedisFlg = cmdOptions.fakeredis;
logger.info('[Option]');
logger.info(' > targetEnv :', targetEnv);
logger.info(' > fakeRedisFlg :', fakeRedisFlg);

// App config
const conf = require('../config/app_config.json')[targetEnv];
if (!conf) {
  logger.error('Please add', targetEnv, 'setting for app_config');
  process.exit(1);
}

// Alert mail func: fills the #{msg} / #{stack} placeholders from the config
const transporter = Mailer.createTransport(conf.alertMail.smtpUrl);
const sendAlertMail = (err, callback) => {
  const msg = err.message;
  const stackStr = err.stack;
  const mailOptions = {
    from: conf.alertMail.from,
    to: conf.alertMail.to,
    subject: conf.alertMail.subject,
    text: conf.alertMail.text,
  };
  mailOptions.subject = mailOptions.subject.replace(/#{msg}/g, msg);
  mailOptions.text = mailOptions.text.replace(/#{msg}/g, msg);
  mailOptions.text = mailOptions.text.replace(/#{stack}/g, stackStr);
  transporter.sendMail(mailOptions, (e) => {
    // Log the mail delivery error itself, not the original error
    if (e) logger.error(e);
    callback();
  });
};

// Function when it catches fatal error: log, send an alert mail, then exit
process.on('uncaughtException', (e) => {
  logger.error(e);
  sendAlertMail(e, () => { process.exit(1); });
});

// ===== Main Logic ==============================
logger.info('START ALL');

// Initialize classes
const redisCli = new FakableRedisClient(
  conf.redis.hostname,
  conf.redis.port,
  conf.redis.password,
  logger,
  fakeRedisFlg
);
const keyValueReader = new KeyValueReader(conf.keyValueFilePath);

Async.waterfall([
  // 1. Redis Authentication
  (callback) => {
    logger.info('START Redis Authentication');
    redisCli.auth(callback);
  },
  // 2. Read the key-value file and upload each pair to Redis
  (callback) => {
    logger.info('FINISH Redis Authentication');
    logger.info('START Upload Key-Value Data');

    // Define the function called for each line
    const expireSec = conf.redis.expireDay * 24 * 60 * 60;
    const processFunc = (key, value, processLineNum, skipFlg) => {
      const keyName = conf.redis.keyPrefix + key;
      if (skipFlg) {
        logger.warn(' > Skip : key=>', keyName, 'value=>', value);
      } else {
        // Log roughly 0.1% of the pairs as a sample
        if (Math.floor(Math.random() * 1000) + 1 <= 1) {
          logger.info(' > Key/Value/Expire(sec) sampling(0.1%):', keyName, value, expireSec);
        }
        redisCli.set(keyName, value);
        redisCli.expire(keyName, expireSec);
        if (processLineNum % 10 === 0) logger.info(processLineNum, 'are processed...');
      }
    };

    // Read key-value data and upload them to Redis
    keyValueReader.executeForEach(processFunc, callback);
  },
], (e, result) => {
  logger.info('FINISH Upload Key-Value Data');
  logger.info('Finished to insert:', result);
  if (e) {
    logger.error(e);
    sendAlertMail(e, () => { process.exit(1); });
  } else {
    logger.info('FINISH ALL');
    process.exit(0);
  }
});
```

The entry point script always follows the same flow:

- Logger setting (library: log4js)

- Command line option parsing (library: command-line-args)

- Config file reading (just reading a JSON file)

- Alert mail setting (library: nodemailer)

- Error handling setting

- Business logic (library: async)
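The business logic step relies on Async.waterfall. Each task receives the previous task's results plus a callback, and the first error skips straight to the final callback. The sketch below is a hypothetical, minimal re-implementation of that control flow in plain JavaScript. It is meant only to show how results and errors travel between the two steps of this batch. It is not the async library's code, and it omits features such as guarding against a task calling its callback twice.

```javascript
// Hypothetical minimal waterfall, for illustration only (not async's implementation).
// Each task is called as task(...previousResults, next); the final callback
// receives either the first error or the last task's results.
function waterfall(tasks, finalCallback) {
  let index = 0;
  const next = (err, ...results) => {
    // Stop on the first error
    if (err) {
      finalCallback(err);
      return;
    }
    // Every task has run: hand the last results to the final callback
    if (index === tasks.length) {
      finalCallback(null, ...results);
      return;
    }
    const task = tasks[index];
    index += 1;
    task(...results, next);
  };
  next(null);
}

// Usage with the same shape as the batch (authenticate, then upload)
waterfall([
  (callback) => callback(null),     // e.g. redisCli.auth(callback)
  (callback) => callback(null, 10), // e.g. keyValueReader.executeForEach(..., callback)
], (err, result) => {
  console.log(err, result); // null 10
});
```

Because step 1 calls back with no extra values, step 2 receives only the callback. This matches how the entry point's second task is written.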

The log4js configuration file is as follows.

```json
{
  "appenders": [
    { "type": "console" }
  ]
}
```

And here is the app config file.

```json
{
  "staging": {
    "keyValueFilePath": "./data/key-value.dat",
    "redis": {
      "hostname": "stg-sample",
      "port": 6379,
      "password": "nodejs",
      "keyPrefix": "key-",
      "expireDay": 1
    },
    "alertMail": {
      "smtpUrl": "smtp://mail.sample.com:25",
      "from": "from@sample.com",
      "to": "to@sample.com",
      "subject": "[STG][ERROR][update-mapping] #{msg} @stg-host",
      "text": "[Stack Trace]\n #{msg} \n #{stack} \n[Common Trouble Shooting Doc About This Batch]\nhttp://sample-document.com/"
    }
  },
  "production": {
    "keyValueFilePath": "./data/key-value.dat",
    "redis": {
      "hostname": "pro-sample",
      "port": 6379,
      "password": "nodejs",
      "keyPrefix": "key-",
      "expireDay": 7
    },
    "alertMail": {
      "smtpUrl": "smtp://mail.sample.com:25",
      "from": "from@sample.com",
      "to": "to@sample.com",
      "subject": "[ERROR][update-mapping] #{msg} @pro-host",
      "text": "[Stack Trace]\n #{msg} \n #{stack} \n[Common Trouble Shooting Doc About This Batch]\nhttp://sample-document.com/"
    }
  }
}
```
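The entry point reads settings such as conf.redis.hostname and conf.alertMail.smtpUrl from this file. A key that is misspelled or named differently in the JSON therefore only surfaces later as undefined, for example when the Redis client is created. A hypothetical loadEnvConfig helper could fail fast at startup instead. The helper name and the list of required keys below are my own assumptions and are not part of the sample repository.

```javascript
// Hypothetical helper (not part of the sample): select one environment's
// section and fail fast if any required dotted key path is missing.
function loadEnvConfig(allConfigs, env, requiredKeys) {
  const conf = allConfigs[env];
  if (!conf) throw new Error(`Please add ${env} setting for app_config`);

  // Walk "a.b.c" paths safely, yielding undefined if any level is absent
  const lookup = (path) => path.split('.').reduce(
    (obj, key) => (obj === undefined || obj === null ? undefined : obj[key]),
    conf
  );
  const missing = requiredKeys.filter((path) => lookup(path) === undefined);
  if (missing.length > 0) {
    throw new Error(`Missing keys in ${env} config: ${missing.join(', ')}`);
  }
  return conf;
}

// Assumed list of the keys the entry point reads; in the entry point this could be
// used as loadEnvConfig(require('../config/app_config.json'), targetEnv, REQUIRED_KEYS)
const REQUIRED_KEYS = [
  'keyValueFilePath', 'redis.hostname', 'redis.port', 'redis.password',
  'redis.keyPrefix', 'redis.expireDay', 'alertMail.smtpUrl',
];

// Usage: a section whose Redis host is stored under a different key name
try {
  loadEnvConfig({ staging: { redis: { ip: 'stg-sample', port: 6379 } } }, 'staging',
    ['redis.hostname', 'redis.port']);
} catch (e) {
  console.log(e.message); // Missing keys in staging config: redis.hostname
}
```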

This log4js setting only writes to stdout. Compression and rotation are handled by logrotate (I didn't include the logrotate configuration in the repository).
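sendAlertMail fills the #{msg} and #{stack} placeholders using String.prototype.replace with a plain string as the replacement. One subtlety is that sequences such as $& and $' are special inside a replacement string, so an error message containing them would be mangled in the alert mail. Below is a minimal sketch built around a hypothetical fillTemplate helper. It passes a function as the replacement, which inserts the value literally.

```javascript
// Hypothetical helper: replace every #{name} placeholder with vars[name].
// With a replacer function, "$&", "$'" and similar sequences in the values
// are inserted literally instead of being interpreted.
// Unknown placeholders are left untouched.
function fillTemplate(template, vars) {
  return template.replace(/#\{(\w+)\}/g, (placeholder, name) => (
    Object.prototype.hasOwnProperty.call(vars, name) ? String(vars[name]) : placeholder
  ));
}

const subject = fillTemplate('[STG][ERROR][update-mapping] #{msg} @stg-host', {
  msg: 'price must be $&100',
});
console.log(subject); // [STG][ERROR][update-mapping] price must be $&100 @stg-host
```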

In the sample code, the business logic consists of the FakableRedisClient and KeyValueReader classes.

To keep things unit-testable, the main script only calls methods of these classes.

Next, I'll show each class and its unit tests.
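The per-line callback in the entry point is still defined inline, so it can only be exercised by running the whole script. Going one step further, a factory could build it from injected dependencies. The sketch below assumes a hypothetical makeProcessFunc and any store object with set(key, value) and expire(key, seconds), such as FakableRedisClient. It drops the random 0.1% sampling log so that its behavior is deterministic.

```javascript
// Hypothetical factory: builds the per-line callback used with
// KeyValueReader#executeForEach from injected dependencies.
// `store` needs set(key, value) and expire(key, seconds); `logger` needs info/warn.
function makeProcessFunc(store, logger, keyPrefix, expireSec) {
  return (key, value, processLineNum, skipFlg) => {
    const keyName = keyPrefix + key;
    if (skipFlg) {
      logger.warn(' > Skip : key=>', keyName, 'value=>', value);
      return;
    }
    store.set(keyName, value);
    store.expire(keyName, expireSec);
    if (processLineNum % 10 === 0) logger.info(processLineNum, 'are processed...');
  };
}

// Usage in a test: record calls on a stub instead of talking to Redis
const calls = [];
const stubStore = {
  set: (k, v) => calls.push(['set', k, v]),
  expire: (k, s) => calls.push(['expire', k, s]),
};
const quietLogger = { info: () => {}, warn: () => {} };
const processFunc = makeProcessFunc(stubStore, quietLogger, 'key-', 86400);
processFunc('abc', '123', 1, false);
processFunc('m(_ _)m', 'orz', 1, true); // skipped: no store calls
// calls now holds one set and one expire for key-abc
```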

FakableRedisClient class

When a class uses Redis or a database, I always write it so that it can easily switch to a mock, both for unit tests and for dry runs.

If you only need mocking for unit tests, you can use sinon instead.

Here is the FakableRedisClient class.

```javascript
const Redis = require('redis');
const FakeRedis = require('fakeredis');

class FakableRedisClient {
  /**
   * Set parameters and create a Redis client instance
   * @param {string} hostname - Redis server host name
   * @param {number} port - Redis server port number
   * @param {string} password - Redis server password
   * @param {Object} logger - logger for error logging
   * @param {boolean} fakeFlg - if true, use fakeredis instead of a real Redis client
   */
  constructor(hostname, port, password, logger, fakeFlg) {
    this.logger = logger;
    this.password = password;
    this.redisFactory = Redis;
    this.client = undefined;
    if (fakeFlg) this.redisFactory = FakeRedis;

    // Validation ('-' is placed last so it is a literal character, not a range)
    if (!/^[0-9a-z._-]+$/.test(hostname)) return;
    if (isNaN(port)) return;

    // Create instance of redis client
    try {
      this.client = this.redisFactory.createClient(port, hostname);
      this.client.on('error', (e) => { this.logger.error(e); });
    } catch (e) {
      this.logger.error(e);
      this.client = undefined;
    }
  }

  /**
   * Authentication
   * @param {Function} callback - called at the end with (err)
   */
  auth(callback) {
    const logger = this.logger;
    this.client.auth(this.password, (e) => {
      if (e) {
        logger.error(e);
        callback(e);
      } else {
        callback(null);
      }
    });
  }

  /**
   * Set key-value pair
   * @param {string} key - key-value's key
   * @param {string} value - key-value's value
   */
  set(key, value) {
    this.client.set(key, value);
  }

  /**
   * Set key-value expire
   * @param {string} key - key which will be set expire
   * @param {number} expireSec - expire seconds
   */
  expire(key, expireSec) {
    this.client.expire(key, expireSec);
  }

  /**
   * Get the value of a key
   * @param {string} key - key to read
   * @param {Function} callback - called with (err, value); value is null if the key does not exist
   */
  get(key, callback) {
    this.client.get(key, (e, value) => {
      if (e) {
        this.logger.error(e);
        callback(e, null);
      } else {
        callback(null, value);
      }
    });
  }
}

module.exports = FakableRedisClient;
```

This class uses fakeredis as a mock of Redis. When the fakeFlg argument is true, the class uses the mock instead of a real Redis client.
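Here fakeredis does the heavy lifting. To show the idea behind a fake without any dependency, below is a hypothetical in-memory stand-in that covers only the calls FakableRedisClient makes: set, expire, and a callback-style get. It is an illustration, not a replacement for fakeredis. It models expiry with an injectable clock, so a test can move time forward instead of waiting 1.1 seconds as the expire test does.

```javascript
// Hypothetical in-memory stand-in for the subset of the Redis client used by
// FakableRedisClient: set, expire and a callback-style get.
class InMemoryKeyValueStore {
  constructor(now = () => Date.now()) {
    this.now = now;        // injectable clock, so tests need no real waiting
    this.data = new Map(); // key -> { value, expiresAt }
  }

  set(key, value) {
    // Like Redis SET, a plain set clears any previous TTL
    this.data.set(key, { value: String(value), expiresAt: null });
  }

  expire(key, seconds) {
    const entry = this.data.get(key);
    if (entry) entry.expiresAt = this.now() + seconds * 1000;
  }

  get(key, callback) {
    // Expiry is checked at call time; the callback runs asynchronously like a real client
    const entry = this.data.get(key);
    if (!entry) return process.nextTick(() => callback(null, null));
    if (entry.expiresAt !== null && entry.expiresAt <= this.now()) {
      this.data.delete(key);
      return process.nextTick(() => callback(null, null));
    }
    return process.nextTick(() => callback(null, entry.value));
  }
}

// Usage: expire a key without sleeping
let fakeNow = 0;
const store = new InMemoryKeyValueStore(() => fakeNow);
store.set('key-abc', '123');
store.expire('key-abc', 1);
fakeNow = 1100; // move the fake clock past the 1-second TTL
store.get('key-abc', (err, value) => {
  console.log(value); // null
});
```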

Here is the unit test code. The mock flag is always on, because unit tests should run anywhere, even where no Redis server is available.

```javascript
const Chai = require('chai');
const Log4js = require('log4js');
const FakableRedisClient = require('../src/fakable-redis-client');

const expect = Chai.expect;
Chai.should();

Log4js.configure('./test/config/logging.json');
const logger = Log4js.getLogger();

// Redis setting for unit test
const hostname = 'sample.host';
const port = 6379;
const password = 'test';
const invalidHostname = '(^_^;)';
const invalidPort = 'abc';

describe('FakableRedisClient', () => {
  describe('constructor', () => {
    it('should set client', () => {
      const client = new FakableRedisClient(hostname, port, password, logger, true);
      client.password.should.equal(password);
      client.client.should.not.be.undefined;
    });

    it('should not set client', () => {
      const client1 = new FakableRedisClient(invalidHostname, port, password, logger, true);
      const client2 = new FakableRedisClient(hostname, invalidPort, password, logger, true);
      client1.password.should.equal(password);
      expect(client1.client).equal(undefined);
      client2.password.should.equal(password);
      expect(client2.client).equal(undefined);
    });
  });

  describe('auth', () => {
    it('should succeed in authentication', (done) => {
      const client = new FakableRedisClient(hostname, port, password, logger, true);
      client.auth((e) => {
        expect(e).equal(null);
        done();
      });
    });
  });

  describe('set', () => {
    it('should get abcdef0', (done) => {
      const testKey = 'test';
      const testValue = 'abcdef0';
      const client = new FakableRedisClient(hostname, port, password, logger, true);
      client.set(testKey, testValue);
      client.get(testKey, (e, result) => {
        result.should.equal(testValue);
        done();
      });
    });
  });

  describe('expire', () => {
    it("shouldn't get the value after 1.1 sec", (done) => {
      const testKey = 'test';
      const testValue = 'abcdef0';
      const expireSec = 1;
      const client = new FakableRedisClient(hostname, port, password, logger, true);
      client.set(testKey, testValue);
      client.expire(testKey, expireSec);
      setTimeout(() => {
        client.get(testKey, (e, result) => {
          expect(result).equal(null);
          done();
        });
      }, 1100);
    });
  });
});
```

KeyValueReader class

Next is the KeyValueReader class. It simply reads key-value data from a TSV file.

```javascript
const Fs = require('fs');
const Lazy = require('lazy');

class KeyValueReader {
  /**
   * Set file path
   * @param {string} filePath - file path to read
   */
  constructor(filePath) {
    this.filePath = /^[0-9a-zA-Z/_.-]+$/.test(filePath) ? filePath : '';
  }

  /**
   * Read each line and run a function on it
   * @param {Function} processFunc - called for each line with (key, value, processLineNum, skipFlg)
   * @param {Function} callback - called at the end with (err, processLineNum)
   */
  executeForEach(processFunc, callback) {
    let processLineNum = 0;
    // highWaterMark is the read buffer size option of fs.createReadStream
    const readStream = Fs.createReadStream(this.filePath, { highWaterMark: 256 * 1024 });
    // Stream errors (e.g. a missing file) are emitted asynchronously,
    // so try/catch alone cannot catch them
    readStream.on('error', (e) => { callback(e); });
    try {
      new Lazy(readStream).lines.forEach((line) => {
        // Get key and value from a tab-separated line
        const keyValueData = line.toString().split('\t');
        const key = keyValueData[0];
        const value = keyValueData[1];
        // Skip lines whose value is missing or whose key/value is not lowercase alphanumeric
        // (RegExp#test(undefined) would test the string "undefined", so check it explicitly)
        const skipFlg = value === undefined
          || !/^[0-9a-z]+$/.test(key) || !/^[0-9a-z]+$/.test(value);
        processLineNum += !skipFlg ? 1 : 0;
        processFunc(key, value, processLineNum, skipFlg);
      }).on('pipe', () => { callback(null, processLineNum); });
    } catch (e) {
      callback(e);
    }
  }
}

module.exports = KeyValueReader;
```

I always use lazy to read files. It reads them as a stream, so it can handle large files. For each line, executeForEach calls the function that was passed in. I think this style is generic and makes unit tests easy to write.
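If you would rather avoid the lazy dependency, Node's core readline module can do the same streaming, line-by-line read. The sketch below is an alternative built on that assumption, not the sample's code. It defines a hypothetical forEachKeyValue with the same processFunc(key, value, processLineNum, skipFlg) and callback(err, processLineNum) contract. It takes any readable stream, so a test can feed it in-memory data.

```javascript
const readline = require('readline');
const { PassThrough } = require('stream');

const TOKEN = /^[0-9a-z]+$/;

// Hypothetical alternative to KeyValueReader#executeForEach built on readline.
// `input` is any readable stream, e.g. fs.createReadStream(filePath).
function forEachKeyValue(input, processFunc, callback) {
  let processLineNum = 0;
  let finished = false;
  // Make sure the callback fires only once, on normal end or on error
  const finish = (err) => {
    if (finished) return;
    finished = true;
    callback(err || null, processLineNum);
  };

  input.on('error', finish);
  const rl = readline.createInterface({ input });
  rl.on('line', (line) => {
    const [key, value] = line.split('\t');
    const skipFlg = value === undefined || !TOKEN.test(key) || !TOKEN.test(value);
    if (!skipFlg) processLineNum += 1;
    processFunc(key, value, processLineNum, skipFlg);
  });
  rl.on('close', () => finish(null));
}

// Usage with in-memory data; for a real file, pass fs.createReadStream(path)
const input = new PassThrough();
forEachKeyValue(input, (key, value, num, skip) => {
  console.log(key, value, num, skip);
}, (err, total) => {
  console.log('done', err, total); // done null 2
});
input.end('abc\t123\nm(_ _)m\torz\ndef\t456\n');
```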

```javascript
const Chai = require('chai');
const KeyValueReader = require('../src/key-value-reader');

const expect = Chai.expect;
Chai.should();

const filePath = './test/data/key-value.dat';
const invalidFilePath = 'f(^o^;)';

describe('KeyValueReader', () => {
  describe('constructor', () => {
    it('should set filePath', () => {
      const reader = new KeyValueReader(filePath);
      reader.filePath.should.equal(filePath);
    });

    it('should not set filePath', () => {
      const reader = new KeyValueReader(invalidFilePath);
      reader.filePath.should.equal('');
    });
  });

  describe('executeForEach', () => {
    it('should call callback without err', (done) => {
      const reader = new KeyValueReader(filePath);
      const expected = [
        { key: 'abc', value: '123', skipFlg: false, processLineNum: 1 },
        { key: 'm(_ _)m', value: 'orz', skipFlg: true, processLineNum: 1 },
        { key: 'def', value: '456', skipFlg: false, processLineNum: 2 },
        { key: '012', value: 'xyz', skipFlg: false, processLineNum: 3 },
      ];
      let counter = 0;
      const processFunc = (key, value, processLineNum, skipFlg) => {
        key.should.equal(expected[counter].key);
        value.should.equal(expected[counter].value);
        skipFlg.should.equal(expected[counter].skipFlg);
        processLineNum.should.equal(expected[counter].processLineNum);
        counter += 1;
      };
      reader.executeForEach(processFunc, (e) => {
        // Every expected line must have been processed without error
        expect(e).equal(null);
        counter.should.equal(expected.length);
        done();
      });
    });
  });
});
```

Preparing a shell script to run the batch

When development is finished, I always prepare a bash script, because I usually need to set some environment variables and Node.js options.

Node.js does run garbage collection, but V8 limits the heap size by default, so a batch that handles a lot of data can sometimes run out of memory. To avoid this, I always set the V8 options optimize_for_size, max_old_space_size and gc_interval.

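```bash
#!/bin/bash
# Load nvm (set up in ~/.bashrc) and select the Node.js version
source ~/.bashrc
nvm use v6.9.1

cd /path/to/appdir || exit 1

# $1 = environment (staging/production); further args (e.g. --fakeredis) are passed through
node --optimize_for_size --max_old_space_size=8192 --gc_interval=1000 \
  ./src/update-mapping.js --env "$1" "${@:2}"
```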
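To confirm that the memory flags actually took effect, the batch can log V8's heap limit and its current usage. Below is a small sketch that uses only Node core APIs, v8.getHeapStatistics() and process.memoryUsage(). The logMemory name and the MiB formatting are my own choices. With --max_old_space_size=8192, the reported limit should be roughly 8 GiB.

```javascript
const v8 = require('v8');

const toMiB = (bytes) => Math.round(bytes / (1024 * 1024));

// Current V8 heap limit in MiB (raised by --max_old_space_size)
function heapLimitMiB() {
  return toMiB(v8.getHeapStatistics().heap_size_limit);
}

// Log heap usage, RSS and the limit, e.g. before and after the upload step.
// `log` defaults to console.log; pass logger.info to use log4js instead.
function logMemory(label, log = console.log) {
  const usage = process.memoryUsage();
  log(`${label}: heapUsed=${toMiB(usage.heapUsed)}MiB rss=${toMiB(usage.rss)}MiB limit=${heapLimitMiB()}MiB`);
}

logMemory('START ALL');
```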

The following is the output of the script.

```
$ ./update-mapping.sh staging --fakeredis >> ./log/update-mapping.log 2>&1
$ cat ./log/update-mapping.log
Now using node v6.9.1 (npm v3.10.8)
[2016-12-22 12:24:30.234] [INFO] [default] - [Option]
[2016-12-22 12:24:30.239] [INFO] [default] - > targetEnv : staging
[2016-12-22 12:24:30.240] [INFO] [default] - > fakeRedisFlg : true
[2016-12-22 12:24:30.246] [INFO] [default] - START ALL
[2016-12-22 12:24:30.250] [INFO] [default] - START Redis Authentication
[2016-12-22 12:24:30.308] [INFO] [default] - FINISH Redis Authentication
[2016-12-22 12:24:30.308] [INFO] [default] - START Upload Key-Value Data
[2016-12-22 12:24:30.317] [WARN] [default] - > Skip : key=> key-m(_ _)m value=> orz
[2016-12-22 12:24:30.318] [INFO] [default] - 10 'are processed...'
[2016-12-22 12:24:30.322] [INFO] [default] - FINISH Upload Key-Value Data
[2016-12-22 12:24:30.322] [INFO] [default] - Finished to insert: 10
[2016-12-22 12:24:30.322] [INFO] [default] - FINISH ALL
```

After checking the result, I add a cron job and configure logrotate.

Code coverage on Jenkins

This isn't directly related to the article's title, but I'll show it briefly, because code coverage is one of the important metrics for quality checking.

The gulpfile already has a code coverage task, so Jenkins only needs to run a few lines like the following.

Add “Publish JUnit test result report” (“JUnitテスト結果の集計” in the Japanese UI) for the unit tests, and “Publish HTML reports” for coverage.

You can then see the reports after each build.

Comment

This style works for me, but I'm still looking for better ways.


Thank you!